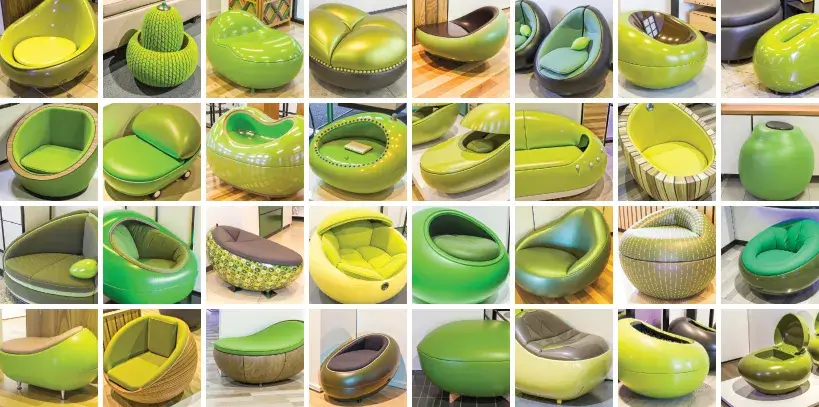

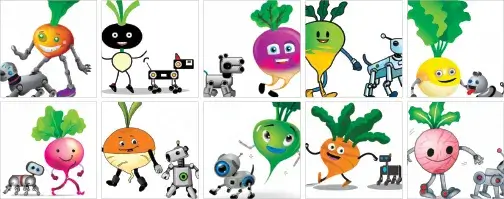

OpenAI’s latest text-to-image model, a successor to DALL·E and GLIDE, is trained to generate original images in response to text descriptions. In the first image, the model iterates on the prompt “an armchair in the shape of an avocado.” In the second, the model generates images for the prompt “an illustration of a happy turnip walking a dog-robot.” Given the same prompt repeatedly, the model produces novel responses. None of these designs or images existed until the model created them. For more, see Ermira Murati’s essay, “Language & Coding Creativity,” in the Spring 2022 issue of Dædalus.

By Heather M. Struntz, Assistant Editor at the Academy

The Spring 2022 issue of Dædalus on “AI & Society,” guest edited by Academy member James Manyika, explores the many facets of AI: its technology, its potential futures, its effects on labor and the economy, its relationship with inequalities, its role in law and governance, its challenges to national security, and what it says about us as humans. What follows are a few additional remarks and insights from the volume’s guest editor on the collection.

What motivated you to organize this volume? Why is this moment significant, and what are the stakes of the questions being explored?

After the birth of AI in the 1950s, the field went through a period of excitement about the possibilities of this new technology. However, what followed was what many came to call the AI winter of the 1970s and 1980s, a period during which AI did not live up to expectations, even though many useful developments emerged. The last decade, by contrast, has seen a rapid succession of breakthrough results that suggest the possibility of more powerful AI, coupled with the adoption and use of its associated techniques in many arenas of society, including in products and services that individuals, organizations, and governments now use routinely: for example, recommendation systems, search, language translation, facial recognition, customer-service chatbots, fraud detection, decision support systems, and research tools. This has been the result of a series of advances in AI’s development that began to gather momentum in the early 1990s and accelerated in the 2000s as the algorithms improved, the data to train them became more available, and computer hardware became more powerful.

Today, we are at a particularly significant moment in AI’s development: as the possibilities and benefits of more powerful AI grow for users and society, we are also confronting real questions and concerns about its varied implications. The stakes are high in both directions. On one side, more useful systems could assist users, both individuals and organizations, in day-to-day activities, improve how things work across many sectors and facets of society, contribute to the economy, lead to new discoveries, and help tackle some of humanity’s greatest challenges, such as those in the life sciences and climate science. On the other, we have concerns about bias, privacy, misuse, unintended consequences, and AI’s impact on jobs and inequality, on the law and institutions, and on security and great power competition.

For all these reasons, and more, this seemed like a good moment to assess where we are in AI’s development and to consider its impact on society.

What consideration went into choosing the authors who would make up this issue?

When it came to inviting contributors to this volume, we first sought authors whose expertise spanned the various aspects of AI’s development, from machine vision to natural language processing to robotics, as well as software and hardware systems, along with authors examining AI’s intersection with various aspects of society, such as the economy, law, policy, national security, and philosophy. At the same time, we did not want the volume to turn into a collection on “technology & society” more generally, so we tried to stay with topics and issues closely related to AI and its associated technologies. Second, we sought authors considered to be at the frontier of their respective fields with respect to the topics in this volume, including leading AI scientists, technologists, social scientists, humanists, and public officials. In addition, we wanted authors who would bring a diversity of views on AI’s progress and its impact on society. There were many contributors I wanted to include, and the volume grew until we simply could not add any more. I am grateful to this distinguished group of contributors, many of them members of the Academy, who accepted my invitation and made time to write these essays; it was such an honor to work with them. With a subject as broad as “AI & Society,” there are, without doubt, many more topics and views missing from this volume, for which I take responsibility. I hope the extensive bibliography and endnotes throughout the volume provide useful references for further exploration and other views.

I should also say that I was quite envious when I discovered that, for the 1988 Dædalus issue devoted to AI, the authors had met in person as a group for discussions and debates, including at the Los Alamos National Laboratory; putting this volume together during the COVID-19 pandemic afforded no such opportunities.

Expanding on a question you posed in your introduction, is it all worth it? What are one or two things that we need to get right in order to answer that question with a yes?

I believe AI is worth pursuing for its exciting and beneficial possibilities. However, my affirmative view is contingent on our also getting several aspects right. There is indeed a long list of things we must get right, including those that many authors in this volume highlight and that I mention in the introduction. But if I am limited to one or two here, I’ll mention two challenges. The first is ensuring that, as we build more powerful AI, it is safe, does not cause or worsen harms to individuals or groups (such as through bias), and can earn public trust, especially where the societal stakes are high. The second set of issues to get right is making sure we focus AI’s development and use where it can make the greatest contributions to humanity, such as in health and the life sciences, climate change, and overall well-being, and that it delivers net positive socioeconomic outcomes (such as with respect to jobs, wages, and opportunities). This is especially important given the likelihood that, without purposeful attention, the resulting AI could be shaped by, and its benefits could accrue to, a few individuals, organizations, and countries, most likely those leading in its development and use. But it is also worth remembering that the issues to get right are not static; they will continue to evolve as AI becomes more capable, as our use of it changes, and as we better understand its impacts on society, especially the second-order effects.

What did the issue not include? Or, what other discussions need to be had?

There are so many more dimensions of artificial intelligence the issue could have focused on or devoted more time to, such as the role AI is playing in advancing research in the sciences and social sciences. Another topic not fully explored is the governance of AI itself, especially with respect to its safe and responsible development, deployment, and use by individuals, organizations, companies, and governments. This is particularly important given the wide range of use scenarios, each involving different stakes as well as normative considerations. We could also have spent more time on the international dimensions of advances in AI, especially for countries not at the current forefront of its development and use. Yet another would have been a deeper discussion of the foundational issues in AI’s development and the many problems that remain hard, including the development of more powerful AI, the sufficiency of current approaches, and the possibility of artificial general intelligence.

Any final takeaways?

I have learned a lot from editing this volume, including from the authors and the many others I talked to and whose work I read along the way (see the endnotes in my introduction to the volume). Perhaps the thought all this has most reinforced for me is not only that we have to get AI right because the stakes in both directions are high, but that, beyond the technologies themselves, much of getting AI right is really about the choices we make as a society. By this I mean the choices we make as a society inclusive of the developers of AI and its deployers or users (individuals, companies, organizations, and governments), whether affected by it beneficially or otherwise. It also matters what and who informs those choices, the goals we set, and the systems we put in place, including incentives, economic and otherwise, to guide us toward the outcomes we collectively want. And these choices must have beneficial uses and outcomes at their core, along with purposeful attention to potential harms, some of which are already here, including uneven outcomes for society. This is what we have to get right.

“AI & Society” is available on the Academy’s website. Dædalus is an open access publication.

James Manyika

James Manyika, a Fellow of the American Academy since 2019, is Chairman and Director Emeritus of the McKinsey Global Institute. He is a Distinguished Fellow of Stanford’s Human-Centered AI Institute and a Distinguished Research Fellow in Ethics & AI at Oxford, where he is also a Visiting Professor. In early 2022, he joined Google as its first Senior Vice President for Technology and Society.

Spring 2022 issue of Dædalus on “AI & Society”

Contents

Getting AI Right: Introductory Notes on AI & Society

James Manyika

On beginnings & progress

“From So Simple a Beginning”: Species of Artificial Intelligence

Nigel Shadbolt

If We Succeed

Stuart Russell

On building blocks, systems & applications

A Golden Decade of Deep Learning: Computing Systems & Applications

Jeffrey Dean

I Do Not Think It Means What You Think It Means: Artificial Intelligence, Cognitive Work & Scale

Kevin Scott

On machine vision, robots & agents

Searching for Computer Vision North Stars

Li Fei-Fei & Ranjay Krishna

The Machines from Our Future

Daniela Rus

Multi-Agent Systems: Technical & Ethical Challenges of Functioning in a Mixed Group

Kobi Gal & Barbara J. Grosz

On language & reasoning

Human Language Understanding & Reasoning

Christopher D. Manning

The Curious Case of Commonsense Intelligence

Yejin Choi

Language & Coding Creativity

Ermira Murati

On philosophical laboratories & mirrors

Non-Human Words: On GPT-3 as a Philosophical Laboratory

Tobias Rees

Do Large Language Models Understand Us?

Blaise Agüera y Arcas

Signs Taken for Wonders: AI, Art & the Matter of Race

Michele Elam

On inequality, justice & ethics

Toward a Theory of Justice for Artificial Intelligence

Iason Gabriel

Artificial Intelligence, Humanistic Ethics

John Tasioulas

On the economy & future of work

Automation, Augmentation, Value Creation & the Distribution of Income & Wealth

Michael Spence

Automation, AI & Work

Laura D. Tyson & John Zysman

The Turing Trap: The Promise & Peril of Human-Like Artificial Intelligence

Erik Brynjolfsson

On great power competition & national security

AI, Great Power Competition & National Security

Eric Schmidt

The Moral Dimension of AI-Assisted Decision-Making: Some Practical Perspectives from the Front Lines

Ash Carter

On the law & public trust

Distrust of Artificial Intelligence: Sources & Responses from Computer Science & Law

Cynthia Dwork & Martha Minow

Democracy & Distrust in an Era of Artificial Intelligence

Sonia K. Katyal

Artificially Intelligent Regulation

Mariano-Florentino Cuéllar & Aziz Z. Huq

On seeing rooms & governance

Socializing Data

Diane Coyle

Rethinking AI for Good Governance

Helen Margetts

Afterword: Some Illustrations

James Manyika