Technology & the Dynamics of Care for Older People

The United States, like many countries, faces a contradiction: a growing number of older adults need care, yet the workforce on which this care depends is underpaid, marginalized, and relegated to the bottom ranks of the health care system. In response, technology presents an appealing potential solution for worried families hoping to remotely monitor “aging in place,” for care homes facing labor shortages, and for the technology companies that stand to profit. But the affordances of these technologies, the visions embedded within them, and their implications for workers, families, and older adults need specification. Drawing on the social scientific and medical literature on care, aging, and technology, this essay investigates several questions. Who is providing care, both paid and unpaid, and how does the introduction of technology into care provision affect each of the participants in the care network? What are the different types of technology that can aid care? What challenges and concerns do these technologies raise? And finally, how might we address these challenges moving forward?

Mary is eighty-six years old and lives alone.1 Her husband died six years ago. Her cognition remains normal, but she is homebound because of mobility and balance problems, and she falls frequently. She cannot prepare meals and needs physical assistance to dress and wash. Her daughter shops for her and visits daily but works full time, so a part-time paid carer supports her in the mornings.

Isaiah is eighty years old, is widowed, and lives alone. His son lives two hundred miles away. He is physically independent but has a progressive decline in cognitive capacity due to dementia. He sometimes leaves the house and cannot find his way back. He has difficulty with most household tasks, including meal preparation and domestic chores, but can still dress and wash himself. His son pays a carer to visit three times per week to provide support and supervision.

Sofia is eighty-eight years old and has advanced dementia and physical frailty. She lives in a nursing home and requires full assistance to dress and wash and supervision to take meals. She is bilingual but now mainly speaks Spanish. Two people are needed to help transfer her from bed to chair and to wash and dress her. She recognizes carers but not family. She has urinary incontinence. She often cries out for help but is easily reassured by carers.

Twenty-five years from now, twenty-five million Americans like Mary, Isaiah, and Sofia will be living with frailty, a condition associated with reduced physical ability, dementia, and increased dependency. By 2050, the number of people living in the United States who are sufficiently dependent to require support in activities of daily living is expected to triple.2 Concurrently, the birthrate is declining, which reduces the absolute and relative numbers of younger people in the population and increases the relative number of those who are old. As a result, more older people are aging without kin to provide care: between 2010 and 2050, the number of kin is estimated to drop from seven to three per older adult.3 The need for care is great, yet the home care workforce on which this transition depends is underpaid, marginalized, and relegated to the bottom ranks of the health care system.4 In response to this looming crisis, technology presents an appealing potential solution for worried families hoping to remotely monitor “aging in place,” for care homes facing worker shortages, and for the technology companies that stand to profit.

Given the growing need for both residential and home care, technologies such as monitoring systems, care robots, and digital companions are increasingly marketed not only as a potential form of worker augmentation but also as worker replacement.5 As sociologist Allison Pugh notes, visions of technology as “better than humans” or of humans and technology as “better together” undergird the development of many new sociotechnical systems.6 And yet studies of such technologies in practice reveal that visions of replacement often function as a mirage: human care is still crucial, even as technology increasingly mediates it.

In this essay, we focus on several key questions relating to older adults like Mary, Isaiah, and Sofia, and the problems they and their carers face. Who is providing care, both paid and unpaid, and how does caring affect them? What are the different types of technologies that can aid care? What challenges and concerns do these different types of technology raise? And finally, how might we address these challenges moving forward? The implications of care technology are of great import for older people, for their families, and for care workers. We argue against both overly optimistic and dystopian images of technology, urging instead a clear assessment of the structural problems at hand.

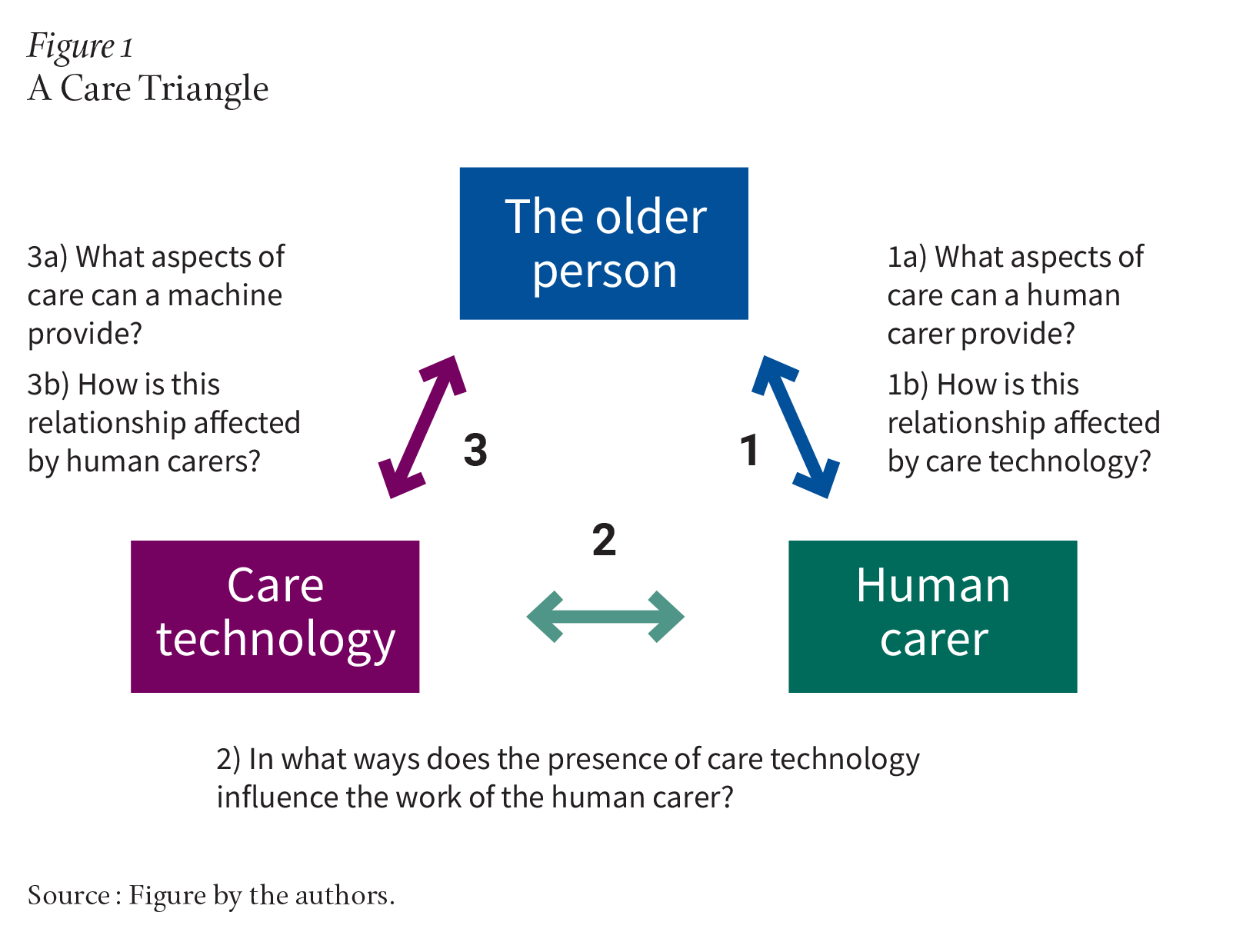

In the simplest models of care, two individuals are involved: the care recipient and the carer. Older people who need care have traditionally received it from their spouses or partners, siblings, children or grandchildren, and extended family, who work alone or together in complex networks. The addition of care technology to this dyad creates new dynamics, interactions, and questions (see Figure 1).

According to a report from the AARP, family carers provide an estimated $600 billion of unpaid care a year, rivaling or exceeding the market capitalization of Fortune 100 companies such as Visa (about $598 billion) and UnitedHealth (about $485 billion).7 In most caregiving arrangements, women are much more likely than men to be carers, with wives and daughters taking on the majority of unpaid care work.8

The behaviors and well-being of older people and their carers are closely intertwined. Caregiving itself can benefit carers, who may experience a sense of altruism, feel that they are contributing to their loved one's life, and serve as role models for the next generation. Caregiving can also strengthen the relationship between care recipients and carers, with downstream benefits for recipients such as better health outcomes, lower mortality, and less distress.9 In this way, human caregiving can benefit both the person who provides care and the person who receives it.

Such benefits are counterbalanced by harms. The high levels of stress, social isolation, and loneliness found across the United States are particularly pronounced among the fifty-three million family carers who shoulder significant responsibility for managing adults' chronic and serious health conditions.10 The relationship between care recipients with chronic conditions and their carers can be undermined as identities shift, patients’ functional status deteriorates, and carers take on more responsibilities. Studies show that patient-carer dyads managing nondementia chronic conditions experience communication barriers, relationship strain, and conflict.11 Ideal care models, technology-enabled or otherwise, would therefore mitigate the negative effects of caregiving on individuals and their relationships while enhancing its positive aspects.

One limitation of the current literature is that it emphasizes the experiences of Western, predominantly white, heterosexual families. Yet caregiving is embedded in cultural norms and mores; role expectations based on gender and filial ties remain much more powerful among, for example, South Asian (such as Indian or Pakistani) families. Cultural factors also influence perceptions of certain diseases: for instance, cancer, dementia, and mental health conditions are stigmatized among individuals from South Asian countries.

As a result, family carers in these communities may be particularly vulnerable to poor outcomes. For example, in a national survey, nearly half of the family carers who identified as Asian American or Pacific Islander reported that they had no choice other than to be a carer if a family member was in need, and that they found caregiving “emotionally stressful.”12

Several things are known about those who are paid to care for older people but who are not doctors, nurses, or other relatively high-status professionals. First are the descriptive statistics. Those doing this work, in the home and in institutions, are growing in number (see Table 1), are low paid, and vary widely in background preparation and qualifications. Typically, they have a high school education, and some have received additional training (see Table 2).13 They are disproportionately women of color, often immigrants, and almost always below the poverty line in earnings. That is the recent picture in the United States.14 Some are family members, employed and reimbursed directly by the care recipient or by the state via a stipend.15 And there is reason to believe these descriptors are comparable for the rest of the industrialized capitalist world.16

| Care Work Occupation | All Workers, 2000: Number | All Workers, 2000: % Women | All Workers, 2014–2016 (ACS): Number | All Workers, 2014–2016 (ACS): % Women | Employment Change, 2000 to 2014–2016: Number | Employment Change, 2000 to 2014–2016: % | BLS Projected Employment Change, 2016–2026: Number | BLS Projected Employment Change, 2016–2026: % | BLS Projected Change: Men | BLS Projected Change: Women | Frey and Osborne: Automation Probability | Frey and Osborne Projected Automation Impact: Men | Frey and Osborne Projected Automation Impact: Women |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Nursing, Psychiatric, and Home Health Aides | 1.660 mil | 87.5 | 2.054 mil | 87.5 | 393,430 | 24 | 613,100 | 24.0 | 76,669 | 536,431 | 0.40 | (96,677) | (616,542) |
| Personal Care Aides | 281,198 | 87.6 | 1.372 mil | 83.3 | 1.091 mil | 388 | 777,600 | 38.6 | 129,859 | 647,741 | 0.74 | (93,091) | (401,901) |
| Childcare Workers | 1.257 mil | 95.3 | 1.264 mil | 93.7 | 6,321 | 1 | 84,300 | 6.9 | 5,284 | 78,989 | 0.08 | (6,654) | (99,499) |
| Total | 3.199 mil | | 4.690 mil | | 1.484 mil | 46 | 1.475 mil | 31.4 | 206,528 | 1.184 mil | | (189,768) | (1.018 mil) |

ACS: American Community Survey; BLS: Bureau of Labor Statistics. Source: Institute for Women’s Policy Research, Women, Automation and the Future of Work (Institute for Women’s Policy Research, 2019), 62. For methodology, see ibid., “Methodological Appendix,” 75.

Second, while there is a large literature on care work, especially care of the young, it tends to focus on the double burden of women in the family, who perform a disproportionate share of the emotional and unpaid labor in the household. While this is undoubtedly an important part of the story, of more interest for our purposes are the hazards that workers experience on the job. Care work can involve heavy lifting as well as verbal and sexual abuse.17 It appears to be particularly dangerous and difficult when the job takes place in the home of the person receiving care. As a National Research Council study concludes:

Health care professionals who practice in the home are more susceptible to a range of injuries and hazards because, unlike medical facilities, the home environment is more variable and generally not designed for the delivery of health care services. For example, although such tasks as lifting, pushing, and pulling are often performed by health care professionals, in the home they have less human assistance, usually no ergonomically designed equipment, and the environment is typically less appropriate (e.g., small spaces, crowded rooms) than in institutional health care facilities. Consequently, tasks may be performed in awkward positions or involve more strain and exertion—and may thereby result in injury. Formal caregivers whose jobs involve substantial time on personal care tasks, such as transferring, bathing, and dressing, have been found to incur among the highest rates of musculoskeletal injuries.18

Language and cultural barriers that make communication difficult between the carer, supervisors, medical professionals, family, and the cared-for add further hazards for care workers. Given how important transparent communication is for trusting relationships, these blockages can have significant consequences for the quality of care.19

Third is what we know about the employment status of these workers. Some are hired directly by the family, but many work for firms or agencies that contract with the family or medical facility. Some paid carers are family members; others are hired to replace or support family input. Part of the payment generally comes from government, through social insurance programs for, or the government pensions of, the elderly.20 The effect is often considerable bureaucratic complexity for those managing the care, and particularly for those carers who lack the skills to navigate the system or who are unaware of their rights. The current system also opens the door to financial and physical abuse of care recipients by opportunistic and unscrupulous carers.21

Given the circumstances of family and paid carers for the elderly, certain types of technology could significantly improve the quality and safety of their work and ease their bureaucratic and communication burdens. Technological aids to care are also increasingly promoted as potential solutions to the complex set of structural problems around paid home care provision. This raises questions about how technology might replace, mediate, or augment human input, and whether it will improve or subvert established models of care. Evidence on the efficacy and acceptability of these technologies is limited, and even less attention has been paid to how technologies such as robotic assistants, voice assistants, and monitoring systems affect existing human relationships within the care dynamic.22

We believe there are three potential drivers underpinning the current surge of interest in AI/robotics and other technologies in care provision. First, population aging poses economic and fiscal challenges to the government, rooted in the changing balance between the economically productive and nonproductive sectors of the population. Second, family perspectives are changing, raising questions about who is directly responsible for care of the old. The third impetus toward technology is the tech industry itself, which is always seeking new markets for its products and new streams of profitable revenue. These privatized market options could reflect the newest expansion of the market into intimate life and an attempt by care facilities and insurers to cut costs.23

Given the potential benefits to carers and receivers of care as well as the financial interests of stakeholders, tech optimism is widespread. Yet new technologies come with significant potential risks, including for an already vulnerable workforce.

| Quick Facts: Home Health and Personal Care Aides | |
| --- | --- |
| 2023 Median Pay | $33,530 per year; $16.12 per hour |
| Typical Entry-Level Education | High school diploma or equivalent |
| Work Experience in a Related Occupation | None |
| On-the-Job Training | Short-term on-the-job training |
| Number of Jobs, 2022 | 3,715,500 |
| Job Outlook, 2022–2032 | 22% (much faster than average) |
| Employment Change, 2022–2032 | 804,600 |

Source: U.S. Bureau of Labor Statistics, “Home Health and Personal Care Aides,” in Occupational Outlook Handbook (U.S. Department of Labor, 2022).

While technological aids can improve conditions for all those in the care network, they can also create new tensions and problems.24 To understand the implications—positive and negative—of technologies, it is necessary to distinguish their intended purpose, their affordances, and the visions of automation they embody.

There are meaningful differences among telehealth software, home monitoring systems, and companion robots: in the problems they purport to solve, the involvement of human workers, and their imagined affordances.25 The term affordance within the communication and media studies literature refers to the possibilities technological artifacts provide to a user.26 The term imagined affordances acknowledges the changing nature of these uses and possibilities; affordances are dependent on the user, designer, and specific social context. We outline some potential affordances here, with the understanding that these may shift depending on the visions of the designers and the ways that carers and care recipients use these technologies in everyday life.

Take the example of a voice assistant like Alexa, which has often been portrayed as akin to a feminized secretary in Amazon’s advertisements.27 Isaiah, the eighty-year-old man with dementia from our earlier vignette, uses Alexa as a glorified speaker, engaging with the voice assistant to listen to music. However, Isaiah’s son, who lives in another state, installed the device so he could monitor his father’s daily interactions with Alexa via the mobile app; if his father is speaking frequently to Alexa, he feels relatively reassured about his well-being. Isaiah does not understand that he is being monitored in this way. For the home care worker who comes to the house three times a week, the device presents a threat of surveillance: she feels uncomfortable at work knowing that Alexa (and by extension her employer) is “listening.” She has no access to the data recorded by the voice assistant, though Isaiah’s son can access all of it. Such contradictions and varied affordances are important to consider, as they are often linked to the risks of these technologies, particularly for workers.

Various technologies propose to alter the care process in different ways; embedded in them are both the problems that they purport to solve, such as loneliness, safety, or the high cost of in-person medical care, as well as visions of how care might be transformed. These problems themselves may be fuzzy and contested; as anthropologist Lucy Suchman notes, technologies branded under the banner of “AI” often provide solutions before defining the problems.28 In Table 3, we outline different areas of care, corresponding technological aids, the problems these technologies claim to solve, current commercial examples, their imagined affordances, and the vision of automation embedded within them. Some technologies span multiple categories, with different affordances allowing for different care needs to be met.

| Type of Care | Types of Technology | Problems They Purport to Solve | Current Commercial Examples | Imagined Affordances | Vision of Automation |
| --- | --- | --- | --- | --- | --- |
| Companionship | Companion robots, animatronic companion animals, voice assistants | Loneliness, isolation | Paro, Joy for All Companion Pets, Alexa, Siri | Conversation (for voice-enabled devices), touch (for physical companion devices), sound (for physical companion devices) | Augmentation, replacement |
| Bio-monitoring | Wearables, monitoring systems, health care robots | Monitoring heart rate, blood pressure, blood sugar levels, other vital signs | Fitbit, Apple Watch | Data collection, data analysis, health assessments, touch, fitness tracking | Mediation |
| Monitoring and surveillance | Home monitoring systems, fall detection systems (in homes and nursing homes), “granny cams” (employed in nursing homes to monitor for elder abuse) | Safety, security | Alexa Together, Rest Assured, Ayesafe | Visual monitoring, audio monitoring, movement monitoring, predictive analysis, workplace surveillance (for home care workers), intimate surveillance (for older people) | Mediation |
| Health care appointments / talk therapy | Telecare, telehealth | High costs of health care and mental health care, physical barriers to seeing providers in person | Talkspace (mental health), Sesame (health), Teladoc (health) | Ability to see the care provider, quick access to care, choice of whether to use video, portability and mobility | Mediation |
| Guidance, reminders | Automated medicine dispensers, voice assistants, digital assistants | Forgetfulness around routines such as medication | Siri (reminders), Hero Health (medication dispenser) | Smartphone integration, audiovisual reminders, customization, carer control | Replacement, mediation |
| Physical care | Care bots, mobility devices, exoskeletons, fallbags (wearable airbags for falls) | Physical barriers to care, recovering from falls, discrepancies in size between carer and older person | Robear | Lifting, movement, touch/haptic interaction | Augmentation |

Source: Table by the authors.

The affordances of different types of care technologies relate to three visions of the future of care work: replacement, mediation, and augmentation.

In replacement visions, technology aims to replace a human care worker. However, ethnographies of automated systems show that replacement is much more complex in practice: human labor is often essential to maintaining these systems.29 Labor therefore changes rather than disappears.

In mediation visions, technology does not replace or augment human input but rather mediates the care process between recipient and provider. Care is conducted via technology, but humans remain at each end of the exchange. Examples of this include telecare, through which patients and health care providers can communicate remotely, and home monitoring systems, through which carers can monitor older people.

Finally, in augmentation visions, technology is intended to assist or augment the human work of care provision. An animatronic pet is not expected to completely replace human companionship; however, it may relieve some of the burden from humans. Similarly, lifting robots are often presented as augmenting human care by assisting with difficult, laborious work while allowing human workers to attend to other tasks. However, in his ethnographic research on the implementation of these care robots, anthropologist James Wright shows that they in fact create more or different kinds of work for carers, sometimes deskilling them in the process.30 Paradoxically, human carers may shift their labor toward “care” of the technology, rather than the human recipient of care. Furthermore, care robots exemplify a significant gap between the visions of their developers and their actual capabilities in practice; their promise has been repeatedly overstated.31

Technological advances have contributed to improvements in the quality of life and health of people of all ages, including older people, by monitoring their conditions, maintaining access to family at a distance, reducing the need to travel to be seen by health care providers, and providing a form of companionship. However, the use of new technology can also introduce new problems for care and new conflicts among those receiving and providing care.

The problems come in several forms. The first is the introduction of errors. Carers, family members, and elderly patients seldom receive sufficient training in using new techniques and machines, and the resources to which they can turn for help are often limited. Dependence on new tools can also mean a failure to learn how to do the job the machine does, which can be disastrous if the technology suddenly stops working. For example, an error in the source code of an automatic pill dispenser controlled via an app could lead to serious health consequences for a person who can no longer access their medication. An additional and very different kind of error results from misinformation. For example, relying on internet forums or a large language model–powered chatbot for information may not only be misleading about the correct diagnosis or best treatment for the patient, but may also cause conflicts among those in the care triangle. This is particularly an issue when the carer is considered a person of low status and thus without authority to counter misinformation and problematic instructions given by the care recipient or their family.

A second problem relates to technology’s role as a companion. When machines substitute for the human carer, or even when they mediate that relationship, they change the interactions and dyadic human relationships that are so critical to the well-being of the patient. The unpredictability, mistakes, and emotional risks that carers accept stand in contrast to the rationalization of this work that automation entails.32 Cultural variations are also relevant to technology’s role as a companion. Anthropologist Jennifer Robertson has argued that, in Japan, both anti-immigrant sentiment and techno-optimistic government propaganda have fostered a cultural environment that is perhaps more accepting of robot carers.33 Finally, the ethics of companionship are complicated, especially in cases of cognitive decline. For individuals like Sofia, the eighty-eight-year-old with advanced dementia, questions of attachment, consent, and the relative value of human companionship become complex sociotechnical problems.34

A third problem has to do with the fine line between monitoring and surveillance. Monitoring of older adults has several positive aspects. It allows carers to have access to visual and auditory alerts from a distance. It can give family members assurance that there is no elder abuse occurring and the ability to track its source should it occur. But as is the case with nanny cams, monitoring can turn into surveillance, leading to inappropriate interventions by those observing. Surveillance of carers can also introduce tensions into their relationships with family members, other employers, insurance providers, and the patient. Considerable evidence exists that treating workers as untrustworthy, which surveillance by its very nature does, undermines the loyalty and good will of the worker.35 Furthermore, both private residences and nursing homes can operate as fraught “private public spaces” in which regulations regarding privacy—on the part of workers, families, and care recipients—are not easily established.36

A final concern and source of tension in the triangle is human care workers’ fear that they will be replaced by machines. Whether or not this fear is overstated, technology will certainly play an increasing role in carers’ work. That raises questions of what kind of care the technology can actually provide, what caregiving it can replace, and what it cannot. Analyses of the impact of technology on work often claim that jobs requiring human interaction, of which caregiving is clearly one, are likely to survive.37 The feminist literature on caregiving goes further, highlighting the importance of the relational component of care in addition to the medical or purely transactional.38 Certainly, there is evidence that older people form attachments to technological aids, be it a voice assistant or a companion robot. There is some cultural variation here, but the emotional commitments of human carers will nonetheless remain an important aspect of their motivation and their contribution to care as long as there are human carers in the loop.

Those who become carers are paid (or rewarded) in part because of the emotional nurturance they provide. Some come to the task with those emotional capacities, some develop them, and some simply pretend, but such capacities are an expected piece of the work for most carers.39 Technology can help make clearer the lines between emotional, medical, and technical labor, but it could also transform the work into an “IT job” and dangerously undermine the relational aspects of care that so many care recipients and carers value.

Despite clear knowledge of demographic change, the United States remains unprepared for its aging population, and particularly how it will care for its dependent old. As such, in the absence of clear new policies, we risk turning a demographic triumph into a demographic disaster.

Some disasters, such as viral pandemics, come largely unforetold, but the aging of our society is clearly understood and entirely predictable. Some may cling to notions of compression of morbidity and dramatic decline in the levels of dependency in older age, but there is as yet no evidence that this is occurring, and to believe that it will within the lifespan of the baby boomer generation is tantamount to neglect.

We believe that policymakers in the United States must: 1) reframe care and caring to reflect all aspects of their value; 2) provide well-paid, well-trained roles for human carers with clear career pathways; and 3) develop regulatory guardrails for further development and deployment of technology.

Technology can undoubtedly support care, but a) its impact on human carers must be better researched and understood; b) its impact on care recipients must be better researched and understood; c) its cost-effectiveness must be better researched and understood; and d) its limits must be defined, informed by empirical research.

When is substituting technology for human care unethical? The assumption that care technology should be rapidly developed for older people, but not for dependent babies and toddlers, reinforces the stereotype of older people as a burden and betrays negative attitudes about the old.

Overzealous pursuit of care technology, promoted by a powerful tech industry and fueled by consumerism, may lead to false beliefs about its utility. The pressing societal need is to create the conditions that enable more humans to participate in care, not to hope to replace them with technology that is ultimately found wanting. At the same time, technology is already mediating and augmenting human care, and its effect on relationships can and should be studied.

Although humans appear biologically conditioned to care for their young, it is an open question whether we are similarly conditioned to care for the old.40 Care for the old varies with culture, class, and demography, among a multitude of other factors.41 The contrast with care of the young is marked: were an emaciated four-year-old found alone in a house, we would immediately presume that responsibility lies with the parents. When an emaciated eighty-four-year-old is found alone, does responsibility lie as clearly with their children?

It is past time for society to transform the model of care of its old. The family, market, and government structures of the past are appropriate for neither the present nor the future.